How to Install and Run Apache Kafka on Windows and Linux

This post explains how to install and start a Kafka cluster locally using the Kafka binary distribution on both Windows and Linux. I will also briefly discuss the Kafka architecture.

What is Kafka

Kafka is a distributed message streaming platform for processing large amounts of data in real time. It is used for stream processing, analytics, and many other purposes. As a distributed system, it can run on a single machine or across a cluster of machines. Kafka has been used by thousands of companies in a variety of industries, including web giants like Facebook, Skype, and Netflix.

Apache Kafka was originally developed at LinkedIn as a stream processing platform before being open-sourced; the project is now maintained by the Apache Software Foundation.

Key Features of Kafka

- Apache Kafka is very fast, and with replication configured it is designed to avoid downtime and data loss when individual brokers fail.

- Kafka readily handles large volumes of streaming data and lets users derive new data streams from existing ones.

- Because Kafka is distributed and fault-tolerant, it is highly reliable, so applications can use it in a variety of ways. New producers and consumers can also connect to it as needed.

- Kafka stores messages in a distributed commit log, appending them to disk sequentially so that writes are fast. Storage failures on individual brokers are handled by the cluster through replication.

Kafka vs. Message Queues

In its simplest form, a message queue lets subscribers pull a message from the head of the queue for processing. A subscriber can pull a single message or a batch of messages at once. Queues typically support some form of acknowledgement or transaction to confirm that the message was processed successfully, after which the message is removed from the queue entirely.

In Kafka, by contrast, you publish messages (or events) to topics, and these messages are not removed when a consumer reads them; they are kept until the topic's retention limit is reached. Because messages persist, consumers can replay them and, more importantly, multiple consumers can apply different logic to the same message. This is what makes Kafka a streaming platform rather than just a message queue.
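To make the difference concrete, here is a toy Bash sketch (an illustration only, not Kafka code) that models both behaviours with in-memory arrays. Reading from the queue removes the message, while the log keeps every message and each consumer, here the hypothetical billing and shipping consumers, tracks its own read position (offset). The functions return their result in the REPLY variable so they can update state without running in a subshell.

```bash
#!/usr/bin/env bash
# Toy model of queue vs. log semantics; an illustration, not Kafka code.
# Requires bash 4+ for the associative array.

# Queue: reading a message removes it, so only one consumer ever sees it.
queue=("order-1" "order-2" "order-3")

queue_pop() {
  REPLY=${queue[0]-}          # take the message at the head...
  queue=("${queue[@]:1}")     # ...and remove it from the queue
}

# Log: messages are never removed on read; each consumer keeps its own offset.
log=("order-1" "order-2" "order-3")
declare -A offset=([billing]=0 [shipping]=0)

log_poll() {
  local consumer=$1
  local pos=${offset[$consumer]:-0}
  REPLY=""
  if (( pos < ${#log[@]} )); then
    REPLY=${log[pos]}
    offset[$consumer]=$(( pos + 1 ))   # advance only this consumer's offset
  fi
}

# Replay: move a consumer's offset back to re-read older messages.
log_seek() {
  offset[$1]=$2
}

queue_pop;        echo "queue gave:   $REPLY"   # order-1 (now removed)
log_poll billing; echo "billing got:  $REPLY"   # order-1
log_poll shipping; echo "shipping got: $REPLY"  # order-1 again
```

In a real cluster, replaying means resetting a consumer group's offsets (for example with kafka-consumer-groups.sh and its --reset-offsets option) rather than republishing the messages.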

Kafka Architecture

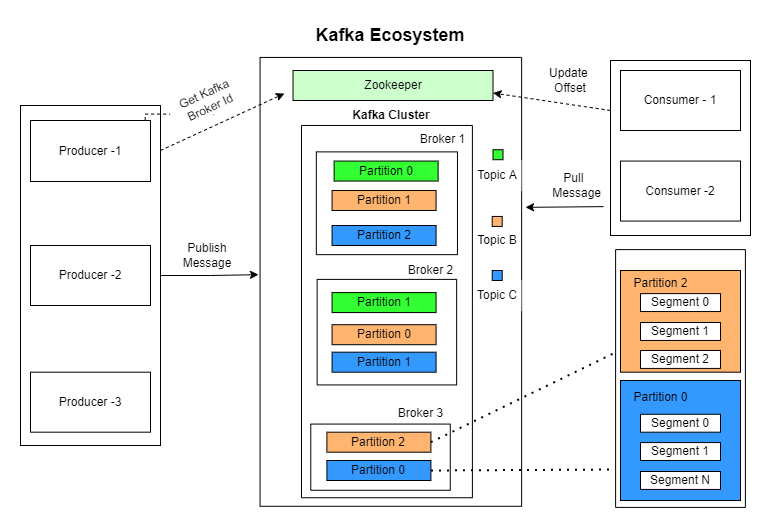

The Kafka ecosystem contains three main components:

- Producer

- Kafka Cluster

- Consumer

Producer – a program that generates messages and publishes them to a topic in the Kafka cluster.

Kafka Cluster – manages and stores the messages.

Consumer – a program that consumes messages from a topic in the Kafka cluster.

Let’s take a deeper look at the Kafka cluster components.

A Kafka cluster is made up of servers called brokers, and each cluster contains one or more of them. Each broker has its own local storage for messages, and the brokers communicate over the network to manage the cluster together. Brokers host the partitions that store the messages for each topic, and each partition is further divided into segments, which are files on disk.
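As a rough illustration of segments: Kafka names each segment file after its base offset (the offset of the first message it holds), zero-padded to 20 digits, and rolls a new segment once the active one reaches segment.bytes (1073741824 bytes, or 1 GiB, in the describe output shown later) or segment.ms elapses. To find a message, the broker looks for the segment with the largest base offset that is not greater than the requested offset. The Bash functions below sketch that naming and lookup; the function names and example base offsets are hypothetical.

```bash
#!/usr/bin/env bash
# Illustration of Kafka's segment naming and lookup (not Kafka code).

# Segment files are named after their base offset, zero-padded to 20 digits.
segment_file_name() {
  printf '%020d.log\n' "$1"
}

# Print the base offset of the segment holding offset $1, given the segments'
# base offsets in ascending order as the remaining arguments.
find_segment() {
  local target=$1 base found=""
  shift
  for base in "$@"; do
    if (( base <= target )); then
      found=$base
    fi
  done
  echo "$found"
}

# Hypothetical partition with three segments starting at offsets 0, 1000, 2500.
base=$(find_segment 1234 0 1000 2500)
segment_file_name "$base"   # prints 00000000000000001000.log
```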

Topics

The core component of a Kafka system is the topic. A topic is a logical group of related messages, for example all order events. Each topic is split into one or more partitions, and each partition is an ordered, append-only collection of messages. The partitions are distributed across the brokers in the cluster and replicated within the cluster to provide high availability.
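When a producer sends a message with a key, the default partitioner chooses the partition from a hash of the key, so all messages with the same key (for example, the same customer) land in the same partition and keep their order. Kafka's default partitioner uses a murmur2 hash; the Bash sketch below swaps in cksum purely for illustration, so its partition numbers will not match those of a real cluster. The function name and keys are hypothetical.

```bash
#!/usr/bin/env bash
# Toy key-based partitioner. Kafka hashes the serialized key with murmur2;
# cksum is used here only to show that equal keys map to equal partitions.
partition_for_key() {
  local key=$1 num_partitions=$2
  local hash
  hash=$(printf '%s' "$key" | cksum | cut -d' ' -f1)
  echo $(( hash % num_partitions ))
}

# customer-1 is mapped to the same partition both times.
for key in customer-1 customer-2 customer-1; do
  echo "$key -> partition $(partition_for_key "$key" 3)"
done
```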

Kafka uses ZooKeeper to reach consensus on the cluster state that all brokers need to agree on.

ZooKeeper performs the following duties:

- Manages cluster membership and configuration

- Detects broker failures and helps the cluster recover from them

- Stores secrets and access control lists
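A broker finds ZooKeeper through the zookeeper.connect property in config/server.properties, which is localhost:2181 in the default configuration, matching clientPort=2181 in config/zookeeper.properties. The hypothetical get_property helper below reads such a value from a properties file. It is a sketch that handles only simple key=value lines, not the full Java properties syntax (colon separators, line continuations and so on).

```bash
#!/usr/bin/env bash
# Print the value of KEY from a .properties file with simple key=value lines.
# Lines starting with # are skipped; only the first match is printed.
get_property() {
  local file=$1 key=$2
  awk -F= -v k="$key" '
    /^[[:space:]]*#/ { next }
    $1 == k { sub(/^[^=]*=/, ""); print; exit }
  ' "$file"
}

# Usage, run from Kafka's bin directory:
#   get_property ../config/server.properties zookeeper.connect
#   get_property ../config/zookeeper.properties clientPort
```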

Advantages of Using Kafka

- Producers and consumers are decoupled.

- The consumer's consumption speed does not affect the producer.

- Adding consumers does not affect the producer.

- The failure of a consumer does not affect the system.

Installing Kafka

Download Kafka Binary

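You can download the Kafka binary from the official Apache Kafka downloads page (https://kafka.apache.org/downloads).

The download is a .tgz file, which is a gzip-compressed tar archive. Once it has downloaded, extract the tar file from the .tgz file, then extract the Kafka directory from the tar file. On Linux, tar -xzf performs both steps at once.

Navigate to the bin directory of the Kafka installation directory.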
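On Linux, the extraction and the change of directory can be scripted. The function below is a hypothetical helper: it unpacks a .tgz archive with tar -xzf (decompress and unpack in one step) and prints the path of the bin directory inside it. It assumes the archive contains a single top-level directory, as the Kafka binary archives do (for example kafka_2.13-3.1.0, the version used in this post).

```bash
#!/usr/bin/env bash
# Extract a Kafka .tgz archive into DEST (default: current directory) and print
# the path of its bin directory. Assumes one top-level directory in the archive.
extract_kafka() {
  local archive=$1 dest=${2:-.}
  local top
  mkdir -p "$dest"
  # -x extract, -z gunzip, -f archive file, -C target directory
  tar -xzf "$archive" -C "$dest" || return 1
  # The first entry names the top-level directory, e.g. kafka_2.13-3.1.0/
  top=$(tar -tzf "$archive" | head -n 1)
  top=${top%%/*}
  echo "$dest/$top/bin"
}

# Usage:
#   cd "$(extract_kafka kafka_2.13-3.1.0.tgz)"
```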

Starting the Kafka cluster

Starting the Kafka cluster is a two-step process.

First, start the ZooKeeper server with the following command. If you are using Windows, navigate to the windows directory inside the bin folder and run the .bat script; on Linux, run the .sh script from the bin folder.

Windows:

zookeeper-server-start.bat ..\..\config\zookeeper.properties

Linux:

./zookeeper-server-start.sh ../config/zookeeper.properties

Next, start the Kafka server. Open another terminal session and run the following command:

Windows:

kafka-server-start.bat ..\..\config\server.properties

Linux:

./kafka-server-start.sh ../config/server.properties

Once both services have launched successfully, you will have a basic Kafka environment running and ready to use.
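Because the broker needs ZooKeeper to be running first, on Linux it can help to start both in the background and wait for each port before moving on. The sketch below assumes it is run with bash from Kafka's bin directory and that the default ports from the shipped configuration are in use (2181 for ZooKeeper, 9092 for the broker). It relies on the -daemon option of the start scripts and on bash's /dev/tcp pseudo-device; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Wait until HOST:PORT accepts TCP connections, checking once per second.
# Uses bash's /dev/tcp pseudo-device, so it needs bash rather than plain sh.
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-30}
  local i
  for (( i = 0; i < timeout; i++ )); do
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Start ZooKeeper, wait for its client port, then start the broker and wait
# for its listener. Must be run from Kafka's bin directory.
start_local_cluster() {
  ./zookeeper-server-start.sh -daemon ../config/zookeeper.properties
  wait_for_port localhost 2181 30 || { echo "ZooKeeper did not start" >&2; return 1; }
  ./kafka-server-start.sh -daemon ../config/server.properties
  wait_for_port localhost 9092 30 || { echo "Kafka did not start" >&2; return 1; }
}

# Usage:
#   start_local_cluster
```

To stop the background processes, run ./kafka-server-stop.sh first and then ./zookeeper-server-stop.sh.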

Creating a Topic to Store Events

Open another terminal session and create a topic named order-events:

Windows:

kafka-topics.bat --create --topic order-events --bootstrap-server localhost:9092

Linux:

./kafka-topics.sh --create --topic order-events --bootstrap-server localhost:9092
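The command above uses the broker's defaults; the shipped server.properties sets num.partitions=1, which matches the PartitionCount: 1 in the describe output below. To script topic creation with an explicit partition count, a hypothetical helper like the one below passes --partitions and --replication-factor and adds --if-not-exists so it can be re-run safely. KAFKA_BIN and BOOTSTRAP_SERVER are assumed variable names that default to the current directory and localhost:9092. On a single-broker setup the replication factor must stay at 1, because it cannot exceed the number of brokers.

```bash
#!/usr/bin/env bash
# Create a topic with an explicit partition count and replication factor.
# KAFKA_BIN and BOOTSTRAP_SERVER are assumed variable names; by default the
# script is expected in the current directory (Kafka's bin folder).
create_topic() {
  local topic=$1 partitions=${2:-3} replication=${3:-1}
  "${KAFKA_BIN:-.}/kafka-topics.sh" --create --if-not-exists \
    --topic "$topic" \
    --partitions "$partitions" \
    --replication-factor "$replication" \
    --bootstrap-server "${BOOTSTRAP_SERVER:-localhost:9092}"
}

# Usage (payment-events is a hypothetical topic name):
#   create_topic payment-events 3 1
```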

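You can view details about the created topic by running the following command:

Windows:

kafka-topics.bat --describe --topic order-events --bootstrap-server localhost:9092

Linux:

./kafka-topics.sh --describe --topic order-events --bootstrap-server localhost:9092

The output looks like this: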

D:\kafka_2.13-3.1.0\bin\windows>kafka-topics.bat --describe --topic order-events --bootstrap-server localhost:9092

Topic: order-events TopicId: VpNPYN4cTPWg2UdevRq7eQ PartitionCount: 1 ReplicationFactor: 1 Configs: segment.bytes=1073741824

Topic: order-events Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Writing events to the Topic

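Running the following command starts the console producer, which prompts you to enter messages that will be written to the topic.

Type each message and press Enter; each line is sent as a separate event.

Windows:

kafka-console-producer.bat --topic order-events --bootstrap-server localhost:9092

Linux:

./kafka-console-producer.sh --topic order-events --bootstrap-server localhost:9092

For example, enter the following messages:

Customer order
Grocery basket
Customer order no 123, Bread-2, Butter-1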

You can press Ctrl + C to stop the process.
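The console producer can also send keyed messages, which matters when related events (such as all lines of one order) should land in the same partition. The parse.key and key.separator properties tell it to split each input line into a key and a value. The hypothetical helper below wraps this for Linux and reads messages from standard input instead of the interactive prompt; KAFKA_BIN and BOOTSTRAP_SERVER are assumed variable names.

```bash
#!/usr/bin/env bash
# Send keyed messages read from stdin; each input line has the form key:value.
# KAFKA_BIN and BOOTSTRAP_SERVER are assumed variable names.
produce_keyed() {
  local topic=$1
  "${KAFKA_BIN:-.}/kafka-console-producer.sh" \
    --topic "$topic" \
    --bootstrap-server "${BOOTSTRAP_SERVER:-localhost:9092}" \
    --property parse.key=true \
    --property key.separator=:
}

# Usage (both lines share the hypothetical key order-123, so they go to
# the same partition):
#   printf '%s\n' 'order-123:Bread-2' 'order-123:Butter-1' | produce_keyed order-events
```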

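Reading events from the Topic

Open another terminal session and run the console consumer client to read the events you just created:

Windows:

kafka-console-consumer.bat --topic order-events --from-beginning --bootstrap-server localhost:9092

Linux:

./kafka-console-consumer.sh --topic order-events --from-beginning --bootstrap-server localhost:9092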

The output looks like this:

D:\kafka_2.13-3.1.0\bin\windows>kafka-console-consumer.bat --topic order-events --from-beginning --bootstrap-server localhost:9092
Customer order
Grocery basket
Customer order no 123, Bread-2, Butter-1
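The consumer command used here does not name a consumer group. To see how several applications can each read the same messages, you can give each consumer its own group with --group: every group receives all messages and tracks its own committed offsets. The hypothetical helper below does that on Linux and stops after --max-messages messages. Note that --from-beginning only takes effect when the group has no committed offsets yet; after that, the group resumes where it left off. The group names in the usage comment are made up, and KAFKA_BIN and BOOTSTRAP_SERVER are assumed variable names.

```bash
#!/usr/bin/env bash
# Read up to MAX messages from TOPIC as consumer group GROUP.
# KAFKA_BIN and BOOTSTRAP_SERVER are assumed variable names.
consume_as_group() {
  local topic=$1 group=$2 max=${3:-10}
  "${KAFKA_BIN:-.}/kafka-console-consumer.sh" \
    --topic "$topic" \
    --group "$group" \
    --from-beginning \
    --max-messages "$max" \
    --bootstrap-server "${BOOTSTRAP_SERVER:-localhost:9092}"
}

# Usage (billing and shipping are hypothetical group names); each group
# receives all three messages:
#   consume_as_group order-events billing 3
#   consume_as_group order-events shipping 3
# Inspect a group's committed offsets and lag:
#   ./kafka-consumer-groups.sh --bootstrap-server localhost:9092 --describe --group billing
```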

References